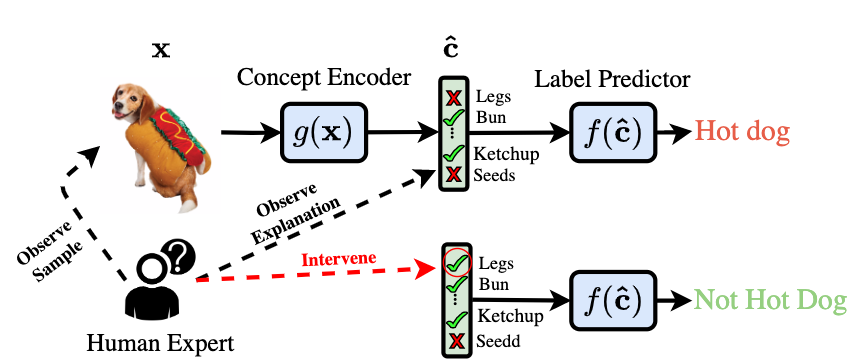

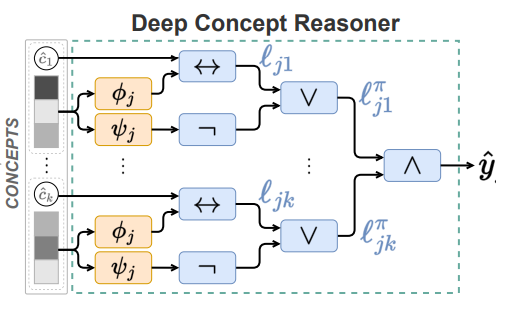

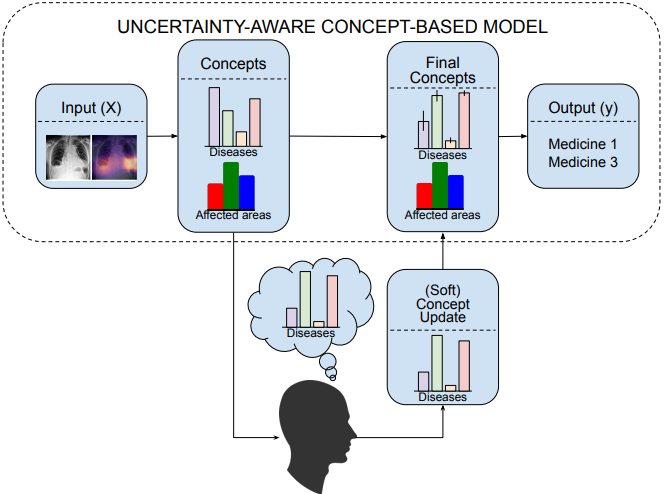

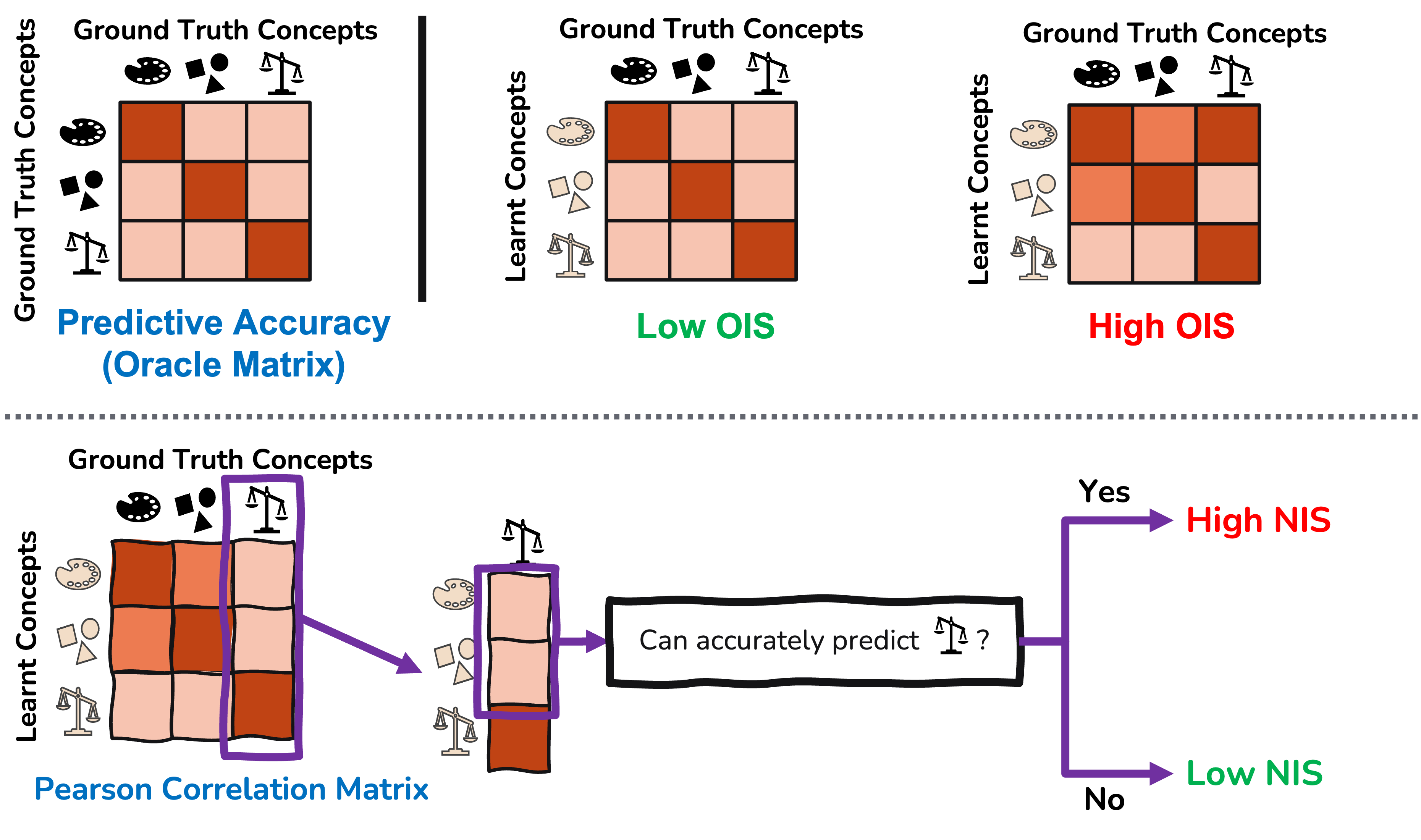

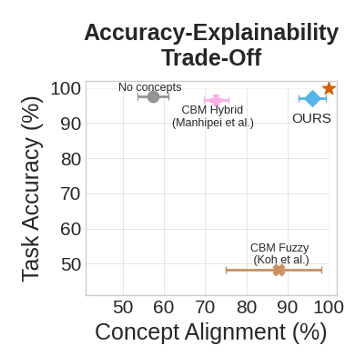

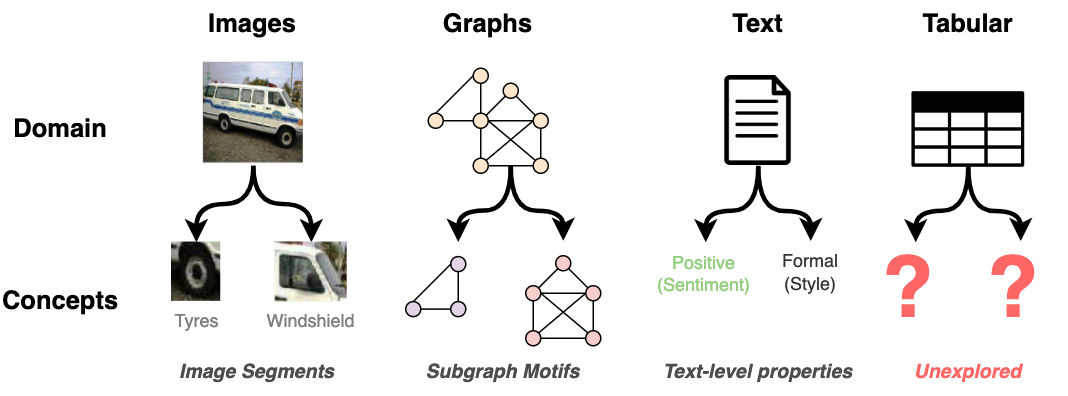

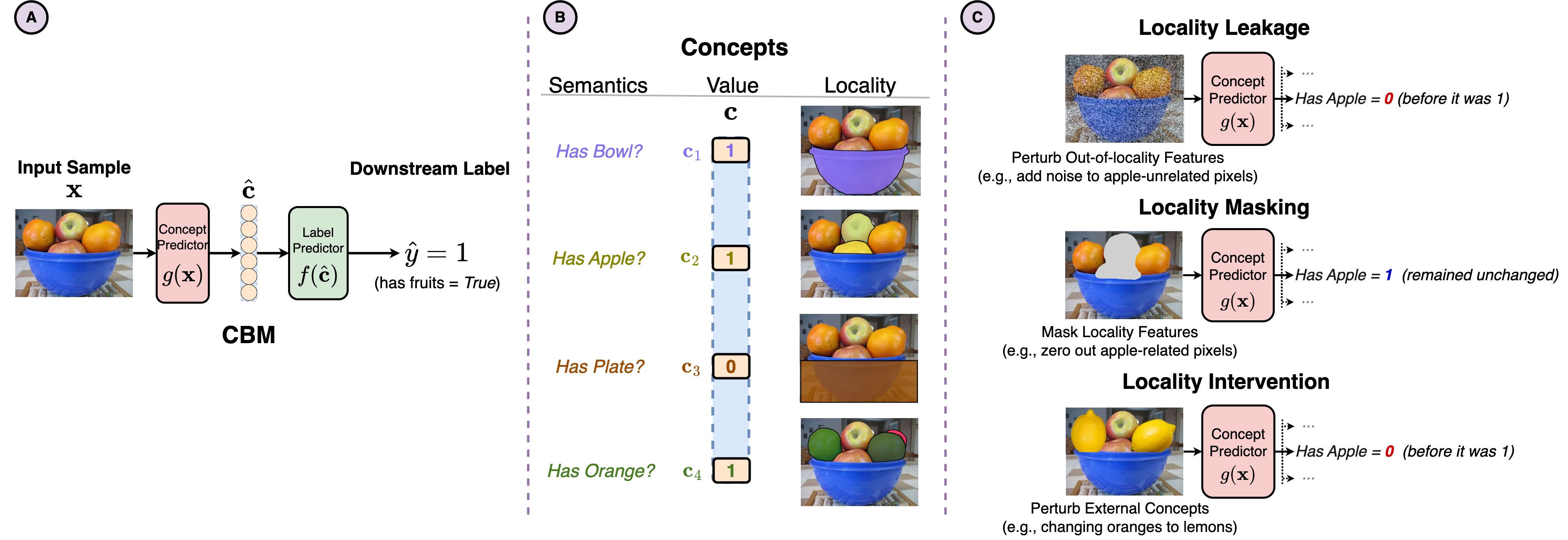

My current research interests lie roughly at the intersection of explainable AI, representation learning, and human-in-the-loop AI. More specifically, I am interested in (1) the design of powerful models that can explain their predictions in terms of high-level “concepts” and (2) the broad applications of these architectures in scenarios where experts can interact with the models at test time. For more details on the general direction of my research, please refer to my Gates Cambridge scholar profile.

Below is a list of some of my publications, along with their venues, papers, code, and presentations (when applicable). For a possibly more up-to-date list, please refer to my Google Scholar profile.